Generating the .pt

Open the Colab notebook.

In it, take the ZIP (yolov11) generated in Roboflow, unpack it, and install Ultralytics (yolo):

%pip install "ultralytics<=8.3.40" supervision roboflow

# prevent ultralytics from tracking your activity

!yolo settings sync=False

import ultralytics

ultralytics.checks()

After that, run the training script:

!yolo task=detect mode=train model=yolo11n.pt data=data.yaml epochs=200 imgsz=640

Save the generated .pt file.
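Ultralytics normally writes training output under runs/detect/train*/weights/, so the file to save is usually the best.pt of the most recent run. A minimal sketch for locating it, assuming that default layout; the helper name find_latest_best and its runs_dir argument are illustrative and not part of Ultralytics:

```python
from pathlib import Path
from typing import Optional


def find_latest_best(runs_dir: str = "runs/detect") -> Optional[Path]:
    """Return the most recently modified best.pt under runs_dir, or None."""
    root = Path(runs_dir)
    if not root.is_dir():
        return None
    # Assumed layout: <runs_dir>/<run name>/weights/best.pt
    candidates = list(root.glob("*/weights/best.pt"))
    if not candidates:
        return None
    # Repeated runs create train, train2, train3, ...; pick the newest file.
    return max(candidates, key=lambda p: p.stat().st_mtime)


if __name__ == "__main__":
    print(find_latest_best())
```

If the training cell was run with a custom project or name, pass that folder as runs_dir instead.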

Install TPU-MLIR and Ultralytics on your PC

If the container already exists, look up its ID and open a shell in it:

docker ps

docker exec -it xxxxxxx /bin/bash

Otherwise, create it:

docker run --privileged --name recamera -v /workspace -it sophgo/tpuc_dev:v3.1

In /workspace:

sudo apt-get update

sudo apt-get upgrade

pip install tpu_mlir[all]==1.7

git clone https://github.com/sophgo/tpu-mlir.git

cd tpu-mlir

source ./envsetup.sh

./build.sh

mkdir model_yolo11n && cd model_yolo11n

cp -rf ${REGRESSION_PATH}/dataset/COCO2017 .

cp -rf ${REGRESSION_PATH}/image .

mkdir Workspace && cd Workspace

pip install ultralytics

(in /dataset/, create a folder containing the training images)

(into /image/, copy one of the training images)

### git clone https://github.com/Seeed-Studio/sscma-example-sg200x.git

### cd sscma-example-sg200x/scripts

### (copy best.onnx into this folder)

### inside /workspace/tpu-mlir/model_yolo11n/sscma-example-sg200x/scripts

### python export.py --output_names "/model.23/cv2.0/cv2.0.2/Conv_output_0,/model.23/cv3.0/cv3.0.2/Conv_output_0,/model.23/cv2.1/cv2.1.2/Conv_output_0,/model.23/cv3.1/cv3.1.2/Conv_output_0,/model.23/cv2.2/cv2.2.2/Conv_output_0,/model.23/cv3.2/cv3.2.2/Conv_output_0" --dataset ../../../../tpu-mlir/regression/dataset/BUGGIO --test_input ../../../../tpu-mlir/regression/image/Ades_2-4_jpg.rf.4de8403c125c5d16b435a839a3a93780.jpg best.onnx

Copy best.pt (generated in Colab) into /workspace/tpu-mlir/model_yolo11n/Workspace.

Run:

yolo export model=best.pt format=onnx imgsz=640,640

This generates best.onnx.

Run, inside /workspace/tpu-mlir/model_yolo11n/Workspace:

model_transform \
  --model_name yolo11n \
  --model_def best.onnx \
  --input_shapes "[[1,3,640,640]]" \
  --mean "0.0,0.0,0.0" \
  --scale "0.0039216,0.0039216,0.0039216" \
  --keep_aspect_ratio \
  --pixel_format rgb \
  --output_names "/model.23/cv2.0/cv2.0.2/Conv_output_0,/model.23/cv3.0/cv3.0.2/Conv_output_0,/model.23/cv2.1/cv2.1.2/Conv_output_0,/model.23/cv3.1/cv3.1.2/Conv_output_0,/model.23/cv2.2/cv2.2.2/Conv_output_0,/model.23/cv3.2/cv3.2.2/Conv_output_0" \
  --test_input ../../../tpu-mlir/regression/image/Ades_2-4_jpg.rf.4de8403c125c5d16b435a839a3a93780.jpg \
  --test_result yolo11n_top_outputs.npz \
  --mlir yolo11n.mlir
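Two arguments of model_transform are easy to mistype. The six --output_names follow a regular pattern: for each of the three detection scales on head layer model.23 there is one cv2 output (box branch) and one cv3 output (class branch). The --scale value is 1/255 rounded to seven decimals. The sketch below rebuilds both strings; it assumes the head index 23 and three scales seen in this yolo11n export (other models may differ), and the function names are illustrative only:

```python
def yolo_output_names(head: int = 23, scales: int = 3) -> str:
    """Build the comma-separated --output_names string used above."""
    names = []
    for i in range(scales):
        # cv2 = box regression conv, cv3 = classification conv, per scale i
        for branch in ("cv2", "cv3"):
            names.append(
                f"/model.{head}/{branch}.{i}/{branch}.{i}.2/Conv_output_0"
            )
    return ",".join(names)


def scale_arg(channels: int = 3) -> str:
    """Build the --scale string: 1/255 per channel, seven decimals."""
    value = f"{1 / 255:.7f}"
    return ",".join([value] * channels)


if __name__ == "__main__":
    print(yolo_output_names())
    print(scale_arg())
```

The names in best.onnx can be confirmed in a model viewer before passing them to model_transform.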

Run the calibration:

run_calibration \
  yolo11n.mlir \
  --dataset ../BUGGIO \
  --input_num 100 \
  -o yolo11n_calib_table
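The calibration step asks for 100 images through --input_num, so it helps to know how many images the dataset folder actually holds before running it. A small sanity check, assuming the folder contains ordinary image files with the extensions listed; count_images is a hypothetical helper, and how run_calibration behaves with fewer images is not covered here:

```python
from pathlib import Path

# Assumed set of image extensions; adjust to match your dataset.
IMAGE_SUFFIXES = {".jpg", ".jpeg", ".png", ".bmp"}


def count_images(dataset_dir: str) -> int:
    """Count image files (recursively) under dataset_dir."""
    root = Path(dataset_dir)
    if not root.is_dir():
        return 0
    return sum(
        1
        for p in root.rglob("*")
        if p.is_file() and p.suffix.lower() in IMAGE_SUFFIXES
    )


if __name__ == "__main__":
    n = count_images("../BUGGIO")
    print(f"{n} images found; --input_num is set to 100")
```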

Run the deployment:

model_deploy \
  --mlir yolo11n.mlir \
  --quantize INT8 \
  --quant_input \
  --processor cv181x \
  --calibration_table yolo11n_calib_table \
  --test_input ../../../tpu-mlir/regression/image/Ades_2-4_jpg.rf.4de8403c125c5d16b435a839a3a93780.jpg \
  --test_reference yolo11n_top_outputs.npz \
  --customization_format RGB_PACKED \
  --fuse_preprocess \
  --aligned_input \
  --model yolo11n_1684x_int8_sym.cvimodel
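Each stage leaves a file in the working folder: best.onnx from the export, yolo11n.mlir and yolo11n_top_outputs.npz from model_transform, yolo11n_calib_table from run_calibration, and the .cvimodel from model_deploy. A quick way to see which stage failed is to list the missing files. This is only a sketch built from the file names used in the commands above; missing_artifacts is a hypothetical helper:

```python
from pathlib import Path
from typing import List

# File names taken from the commands in this post, in pipeline order.
EXPECTED = [
    "best.onnx",
    "yolo11n.mlir",
    "yolo11n_top_outputs.npz",
    "yolo11n_calib_table",
    "yolo11n_1684x_int8_sym.cvimodel",
]


def missing_artifacts(workdir: str = ".") -> List[str]:
    """Return the expected files that are not present in workdir."""
    root = Path(workdir)
    return [name for name in EXPECTED if not (root / name).is_file()]


if __name__ == "__main__":
    missing = missing_artifacts(".")
    print("all artifacts present" if not missing else f"missing: {missing}")
```

The first missing name in the list points at the stage to rerun.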

The cvimodel is written to

/workspace/tpu-mlir/model_yolo11n/Workspace

On the Windows host (Docker Desktop), the same folder appears under the volume path

\\wsl.localhost\docker-desktop\mnt\docker-desktop-disk\data\docker\volumes\8bd5aab9644ef6f95e9275c42306a33a7313a58d86c9a1a7ab20e4a24f5649aa\_data\tpu-mlir\model_yolo11n\Workspace

### the reCamera script

REF:

Model Conversion Guide | Seeed Studio Wiki

Roboflow: Computer vision tools for developers and enterprises

sscma-example-sg200x/scripts/export.py at c027ed4ea14564b69530a9958150953182443126 · Seeed-Studio/sscma-example-sg200x

Monkey CVI model · Issue #37 · Seeed-Studio/reCamera-OS

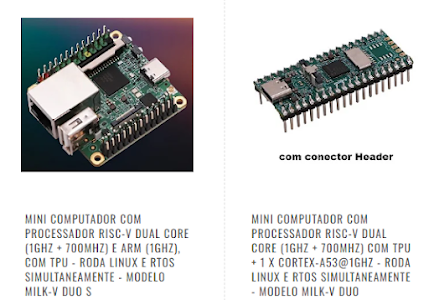

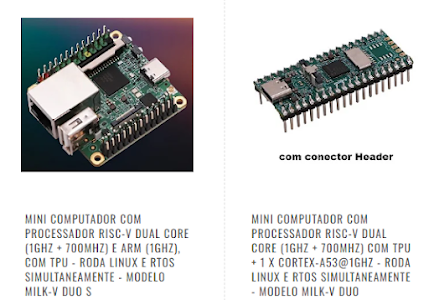

About SMARTCORE

SMARTCORE supplies chips and modules for IoT, wireless communication, biometrics, connectivity, tracking, and automation. Our portfolio includes 2G/3G/4G/NB-IoT modems, satellite, Wi-Fi modules, Bluetooth, GPS, Sigfox, LoRa, card readers, QR code readers, printing mechanisms, mini-board PCs, antennas, pigtails, batteries, GPS repeaters, and sensors.
